30 November 2012

Cloud Tip: What do you mean by BigData and why?

Hi Folks,

BigData in simple words:

Some people think Big Data is simply more information than can be stored on a personal computer. Others think of it as overlapping sets of data that reveal unseen patterns, helping us understand our world—and ourselves—in new ways.

Still others think that our smartphones are turning each of us into a human sensor and that our planet is developing a nervous system. Below, experience how Big Data is shaping your life.

EACH OF US LEAVES A TRAIL OF DIGITAL EXHAUST, FROM TEXTS TO GPS DATA, WHICH WILL LIVE ON FOREVER.

A PERSON TODAY PROCESSES MORE DATA IN A SINGLE DAY THAN A PERSON IN THE 1500S DID IN A LIFETIME.

EVERY OBJECT ON EARTH WILL SOON BE GENERATING DATA, INCLUDING OUR HOMES, OUR CARS, AND YES, EVEN OUR BODIES.

More information is available at Student Face of BigData.

Click the "Let's go" link to see, with the help of infographics, how much data is being generated day by day.

Cheers!

Courtesy goes to: Student Face of BigData

Dev Tip: Fastest Key-Value library from Google

Hi Folks,


Today let's look into Key-Value storage library called "LevelDB".

LevelDB is a fast key-value storage library written at Google that provides an ordered mapping from string keys to string values. LevelDB is based on an LSM (Log-Structured Merge-Tree) and uses SSTables and a MemTable for the database implementation. It's written in C++ and available under the BSD license. LevelDB treats keys and values as arbitrary byte arrays and stores keys in sorted order. It uses the Snappy library for data compression. Reads and writes can proceed concurrently, but writes perform best from a single thread, whereas reads scale with the number of cores.
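To make the MemTable/SSTable idea a little more concrete, here is a toy log-structured merge sketch in Python. It is not LevelDB's code or API: the `ToyLSM` class, the `memtable_limit` parameter, and the use of in-memory sorted lists in place of on-disk SSTable files are all assumptions for illustration. Writes land in a mutable MemTable; when it fills up it is frozen into an immutable sorted run; reads check the MemTable first and then the runs from newest to oldest; deletes are recorded as tombstones until compaction drops them.

```python
import bisect
from typing import Dict, Iterator, List, Optional, Tuple

_TOMBSTONE = object()  # marks a deleted key until compaction drops it


class ToyLSM:
    """Toy LSM store: one mutable MemTable plus immutable sorted runs.

    The sorted runs stand in for on-disk SSTable files; everything here is in memory.
    """

    def __init__(self, memtable_limit: int = 4) -> None:
        self.memtable_limit = memtable_limit
        self.memtable: Dict[bytes, object] = {}
        self.runs: List[List[Tuple[bytes, object]]] = []  # oldest run first

    def put(self, key: bytes, value: bytes) -> None:
        self.memtable[key] = value
        self._maybe_flush()

    def delete(self, key: bytes) -> None:
        # A delete is just another write: a tombstone that shadows older values.
        self.memtable[key] = _TOMBSTONE
        self._maybe_flush()

    def _maybe_flush(self) -> None:
        if len(self.memtable) >= self.memtable_limit:
            # Freeze the MemTable into a new sorted, immutable run.
            self.runs.append(sorted(self.memtable.items()))
            self.memtable = {}

    def get(self, key: bytes) -> Optional[bytes]:
        # Newest data first: MemTable, then runs from newest to oldest.
        if key in self.memtable:
            value = self.memtable[key]
            return None if value is _TOMBSTONE else value
        for run in reversed(self.runs):
            i = bisect.bisect_left(run, (key,))  # binary search on the sorted run
            if i < len(run) and run[i][0] == key:
                value = run[i][1]
                return None if value is _TOMBSTONE else value
        return None

    def scan(self) -> Iterator[Tuple[bytes, object]]:
        """Yield live pairs in key order (a real engine would stream a k-way merge)."""
        merged: Dict[bytes, object] = {}
        for run in self.runs:          # oldest to newest, so newer values overwrite
            merged.update(run)
        merged.update(self.memtable)
        for key in sorted(merged):
            if merged[key] is not _TOMBSTONE:
                yield key, merged[key]

    def compact(self) -> None:
        """Merge all runs and the MemTable into one run, dropping tombstones."""
        live = list(self.scan())
        self.runs = [live] if live else []
        self.memtable = {}


db = ToyLSM(memtable_limit=2)
db.put(b"b", b"2")
db.put(b"a", b"1")     # MemTable full: flushed as the first run
db.put(b"c", b"3")
db.delete(b"a")        # second flush; the tombstone shadows the older b"a"
print(db.get(b"a"), db.get(b"b"))   # None b'2'
print(list(db.scan()))              # [(b'b', b'2'), (b'c', b'3')]
```

Compaction is what turns a pile of small runs (and their tombstones) back into one sorted run, which is the same reason the LevelDB read benchmarks further down improve after compaction.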

A Java package built with a JNI wrapper is available for LevelDB (stable version). It has been forked for further development, so updates may not be reflected in the public Git repository because of security issues.

Features:

- Keys and values are arbitrary byte arrays.

- Data is stored sorted by key.

- Callers can provide a custom comparison function to override the sort order.

- The basic operations are Put(key,value), Get(key), Delete(key).

- Multiple changes can be made in one atomic batch.

- Users can create a transient snapshot to get a consistent view of data.

- Forward and backward iteration is supported over the data.

- Data is automatically compressed using the Snappy compression library.

- External activity (file system operations etc.) is relayed through a virtual interface so users can customize the operating system interactions.

- Detailed documentation about how to use the library is included with the source code.
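The snapshot and atomic-batch features are easiest to picture with sequence numbers: every write is stamped with an increasing number, a snapshot simply remembers the current number, and a batch becomes visible all at once because it is published under a lock. The sketch below is a hypothetical in-memory model (`VersionedStore`, `write_batch`, and `get(..., snapshot=...)` are illustrative names, not LevelDB's C++ API); it only shows the visibility rules, not durability or on-disk layout.

```python
import threading
from typing import Dict, List, Optional, Tuple


class VersionedStore:
    """Toy multi-version store: every key keeps a (sequence, value) history."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._seq = 0  # last sequence number handed out
        self._history: Dict[bytes, List[Tuple[int, Optional[bytes]]]] = {}

    def write_batch(self, ops: List[Tuple[str, bytes, Optional[bytes]]]) -> None:
        """Apply ("put", key, value) and ("delete", key, None) ops as one unit.

        Readers take the same lock, so they never observe half of a batch.
        """
        with self._lock:
            for op, key, value in ops:
                self._seq += 1
                stored = value if op == "put" else None  # None records a delete
                self._history.setdefault(key, []).append((self._seq, stored))

    def snapshot(self) -> int:
        """A snapshot is just the sequence number current at this moment."""
        with self._lock:
            return self._seq

    def get(self, key: bytes, snapshot: Optional[int] = None) -> Optional[bytes]:
        """Return the newest value visible at `snapshot` (default: latest)."""
        with self._lock:
            limit = self._seq if snapshot is None else snapshot
            for seq, value in reversed(self._history.get(key, [])):
                if seq <= limit:
                    return value
            return None


store = VersionedStore()
store.write_batch([("put", b"k1", b"v1"), ("put", b"k2", b"v2")])
snap = store.snapshot()
store.write_batch([("delete", b"k1", None), ("put", b"k2", b"v2-new")])
print(store.get(b"k1"), store.get(b"k2"))              # None b'v2-new'
print(store.get(b"k1", snap), store.get(b"k2", snap))  # b'v1' b'v2'
```

Reads through the snapshot keep seeing the state as of the first batch, even though later writes have replaced or deleted both keys.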

Limitations:

- This is not a SQL database. It does not have a relational data model, it does not support SQL queries, and it has no support for indexes.

- Only a single process (possibly multi-threaded) can access a particular database at a time.

- There is no client-server support built into the library. An application that needs such support will have to wrap its own server around the library.

Here is a performance report (with explanations) from the run of the included db_bench program. The results are somewhat noisy, but should be enough to get a ballpark performance estimate.

Setup

We use a database with a million entries. Each entry has a 16-byte key and a 100-byte value. Values used by the benchmark compress to about half their original size.

- LevelDB: version 1.1
- Date: Sun May 1 12:11:26 2011
- CPU: 4 x Intel(R) Core(TM)2 Quad CPU Q6600 @ 2.40GHz
- CPUCache: 4096 KB
- Keys: 16 bytes each
- Values: 100 bytes each (50 bytes after compression)
- Entries: 1000000
- Raw Size: 110.6 MB (estimated)
- File Size: 62.9 MB (estimated)

Write performance

The "fill" benchmarks create a brand new database, in either sequential or random order. The "fillsync" benchmark flushes data from the operating system to the disk after every operation; the other write operations leave the data sitting in the operating system buffer cache for a while. The "overwrite" benchmark does random writes that update existing keys in the database.

- fillseq : 1.765 micros/op; 62.7 MB/s
- fillsync : 268.409 micros/op; 0.4 MB/s (10000 ops)
- fillrandom : 2.460 micros/op; 45.0 MB/s
- overwrite : 2.380 micros/op; 46.5 MB/s

Each "op" above corresponds to a write of a single key/value pair. I.e., a random write benchmark goes at approximately 400,000 writes per second.

Each "fillsync" operation costs much less (0.3 millisecond) than a disk seek (typically 10 milliseconds). We suspect that this is because the hard disk itself is buffering the update in its memory and responding before the data has been written to the platter. This may or may not be safe based on whether or not the hard disk has enough power to save its memory in the event of a power failure.

Read performance

We list the performance of reading sequentially in both the forward and reverse direction, and also the performance of a random lookup. Note that the database created by the benchmark is quite small. Therefore the report characterizes the performance of LevelDB when the working set fits in memory. The cost of reading a piece of data that is not present in the operating system buffer cache will be dominated by the one or two disk seeks needed to fetch the data from disk. Write performance will be mostly unaffected by whether or not the working set fits in memory.

- readrandom : 16.677 micros/op; (approximately 60,000 reads per second)
- readseq : 0.476 micros/op; 232.3 MB/s
- readreverse : 0.724 micros/op; 152.9 MB/s

LevelDB compacts its underlying storage data in the background to improve read performance. The results listed above were done immediately after a lot of random writes. The results after compactions (which are usually triggered automatically) are better.

- readrandom : 11.602 micros/op; (approximately 85,000 reads per second)
- readseq : 0.423 micros/op; 261.8 MB/s
- readreverse : 0.663 micros/op; 166.9 MB/s

Some of the high cost of reads comes from repeated decompression of blocks read from disk. If we supply enough cache to LevelDB so it can hold the uncompressed blocks in memory, the read performance improves again:

- readrandom : 9.775 micros/op; (approximately 100,000 reads per second before compaction)
- readrandom : 5.215 micros/op; (approximately 190,000 reads per second after compaction)
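The throughput figures in the report follow directly from the per-operation latencies and the 116-byte entry size (16-byte key plus 100-byte value). The small helper below reproduces them. One assumption is baked in: the reported MB/s values only line up if "MB" means 2^20 bytes, so that is what `MB` is set to here.

```python
KEY_BYTES = 16
VALUE_BYTES = 100
ENTRY_BYTES = KEY_BYTES + VALUE_BYTES  # 116 bytes per key/value pair
MB = 1 << 20  # assumption: db_bench's "MB" is 2**20 bytes


def ops_per_second(micros_per_op: float) -> float:
    """Convert a latency in microseconds per op into operations per second."""
    return 1_000_000 / micros_per_op


def mb_per_second(micros_per_op: float, bytes_per_op: int = ENTRY_BYTES) -> float:
    """Throughput in MB/s when each op moves one key/value pair."""
    return ops_per_second(micros_per_op) * bytes_per_op / MB


for name, micros in [("fillseq", 1.765), ("fillrandom", 2.460),
                     ("overwrite", 2.380), ("fillsync", 268.409)]:
    print(f"{name:10s} {ops_per_second(micros):>10,.0f} ops/s  "
          f"{mb_per_second(micros):5.1f} MB/s")

# Size estimates from the Setup section (1,000,000 entries).
print(f"raw  {1_000_000 * ENTRY_BYTES / MB:.1f} MB")                     # raw  110.6 MB
# File size assumes keys stay uncompressed and values shrink to ~50 bytes.
print(f"file {1_000_000 * (KEY_BYTES + VALUE_BYTES // 2) / MB:.1f} MB")  # file 62.9 MB
```

For example, `ops_per_second(2.460)` is about 406,504, the "approximately 400,000 writes per second" quoted for random writes, and `ops_per_second(16.677)` is about 59,963, the "approximately 60,000 reads per second" for readrandom.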

This article may help someone in the future.

Cheers!

Courtesy goes to the LevelDB wiki.

Dev Tip: My Experience Using a Compression Library Used by Google

Hi Folks,


While I was doing system architecture and development for a brand-new EduAlert product, "Learn360", I went through a series of challenges; let's go through one interesting challenge.

Note: I am not going to explain the product architecture, since the product rights belong to EduAlert. My only concern here is the key technical challenges I faced during development.

A little introduction about the product "Learn360":

'A proprietary learning platform that provides easy, coherent access to networks of people and resources'.

A new-generation LMS (Learning Management System) requires a large amount of metadata to be stored and processed, since most of the content pushed through an LMS is in video or text format.

Here the problem is: "What's the best way to store redundant data on a file system or in a database without compromising efficiency?"

I thought of implementing a dedupe file system at the file system level, as a user-space file system built on the FUSE API. I wrote a simple Python program using the FUSE API, and it worked, but it lacked a file-locking mechanism and had severe performance issues. Since FUSE runs the file system in user space rather than as a kernel module, every IO request makes an extra round trip between the kernel and the user-space process, so IO times are high. Hence it was not an advisable approach for me.
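The core idea behind a dedupe file system, independent of FUSE, is content addressing: split data into chunks, hash each chunk, and store each distinct chunk only once. Here is a minimal in-memory sketch of that idea (not the Python FUSE program described above); the `DedupStore` name, the fixed 4 KiB chunk size, and the choice of SHA-256 are assumptions for illustration, and many real dedupe systems use variable-size, content-defined chunking instead.

```python
import hashlib
from typing import Dict, List


class DedupStore:
    """Toy content-addressed store: identical chunks are kept only once."""

    def __init__(self, chunk_size: int = 4096) -> None:
        self.chunk_size = chunk_size
        self.chunks: Dict[str, bytes] = {}      # SHA-256 digest -> chunk bytes
        self.files: Dict[str, List[str]] = {}   # file name -> ordered chunk digests

    def write(self, name: str, data: bytes) -> None:
        digests = []
        for start in range(0, len(data), self.chunk_size):
            chunk = data[start:start + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # store only chunks not seen before
            digests.append(digest)
        self.files[name] = digests

    def read(self, name: str) -> bytes:
        return b"".join(self.chunks[d] for d in self.files[name])

    def stored_bytes(self) -> int:
        return sum(len(c) for c in self.chunks.values())


store = DedupStore()
payload = b"lecture-metadata " * 1000     # 17,000 bytes of hypothetical metadata
store.write("video1.meta", payload)
store.write("video2.meta", payload)       # duplicate content adds no new chunks
assert store.read("video2.meta") == payload
print(store.stored_bytes())               # 17000, not 34000
```

The file names and payload are made up; the point is that the second copy costs only a list of digests.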

My thoughts then went in a different direction: "What about incorporating the zlib library and compressing/decompressing data during IO?" In the end I decided against this approach because the compression overhead was too high, and our system is highly dependent on (metadata) IO.

I also thought of caching the frequently used decompressed metadata in Memcached. The overhead was still high, though far better than with the previous approach.

So my primary focus became finding an efficient way to compress and decompress metadata with low overhead. After a few hours of literature survey I found "Snappy", a library used by Google and other companies, and incorporated it into our core framework.

This library may help others in the near future who are working on similar compression problems.

More about Snappy:

Snappy is a compression/decompression library. It does not aim for maximum compression, or compatibility with any other compression library; instead, it aims for very high speeds and reasonable compression. For instance, compared to the fastest mode of zlib, Snappy is an order of magnitude faster for most inputs, but the resulting compressed files are anywhere from 20% to 100% bigger. On a single core of a Core i7 processor in 64-bit mode, Snappy compresses at about 250 MB/sec or more and decompresses at about 500 MB/sec or more.
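Speed and ratio numbers like these depend heavily on the input, so it is worth measuring on your own data before choosing. The harness below is a sketch that times any compress/decompress pair and reports the ratio; it is shown with the standard-library `zlib` at its fastest (level 1) and strongest (level 9) settings. If the third-party python-snappy binding is installed, its compress/decompress functions could presumably be passed in the same way, but that is an assumption to check against the binding you use. The sample data is made up.

```python
import time
import zlib
from typing import Callable


def measure(name: str, compress: Callable[[bytes], bytes],
            decompress: Callable[[bytes], bytes], data: bytes,
            rounds: int = 5) -> dict:
    """Return compression ratio and MB/s for one compressor on `data`."""
    packed = compress(data)
    assert decompress(packed) == data, "round trip must be lossless"

    start = time.perf_counter()
    for _ in range(rounds):
        compress(data)
    comp_secs = (time.perf_counter() - start) / rounds

    start = time.perf_counter()
    for _ in range(rounds):
        decompress(packed)
    decomp_secs = (time.perf_counter() - start) / rounds

    mb = len(data) / 1e6
    return {
        "name": name,
        "ratio": len(packed) / len(data),                  # smaller is better
        "compress_MBps": mb / max(comp_secs, 1e-9),
        "decompress_MBps": mb / max(decomp_secs, 1e-9),
    }


sample = b'{"course": "Algebra", "lesson": 7, "type": "video"}\n' * 20000
for level in (1, 9):
    r = measure(f"zlib-{level}", lambda d, lv=level: zlib.compress(d, lv),
                zlib.decompress, sample)
    print(f"{r['name']}: ratio {r['ratio']:.3f}, "
          f"{r['compress_MBps']:.0f} MB/s in, {r['decompress_MBps']:.0f} MB/s out")
```

The `lv=level` default argument pins each lambda to its own level; without it both lambdas would see the loop's final value.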

Snappy is widely used inside Google, in everything from BigTable and MapReduce to Google's internal RPC systems. (Snappy has previously been referred to as “Zippy” in some presentations and the like.)

Supported language bindings:

Snappy is written in C++, but C bindings are included, and several bindings to other languages are maintained by third parties:

- C89 port

- Common Lisp

- Erlang: esnappy, snappy-erlang-nif

- Go

- Haskell

- Java: JNI wrapper, native reimplementation

- Node.js

- Perl

- PHP

- Python

- Ruby

Cheers!

14 November 2012

Dev Tip: Application Server Profiler for Java & .NET

Hi Folks,


I am working on application profiling for EduAlert applications, which are developed with Spring and run on the JBoss application server. I found an interesting solution for this that may benefit others.

AppDynamics Lite is the very first free product designed for troubleshooting Java/.NET performance while getting full visibility in production environments.

Supported platforms:

- Java Virtual Machines:
  - Sun Java 1.5, 1.6, 1.7
  - IBM JVM 1.5, 1.6, 1.7
  - JRockit 1.5, 1.6, 1.7

- Java Application Servers:
  - WebSphere, WebLogic, JBoss, Tomcat, GlassFish, Jetty, OSGi

- Java Programming Stacks:
  - Spring, Struts, JSF, Web Services, EJBs, Servlets, JSPs, JPA, Hibernate, JMS

- Supported .NET Versions:
  - .NET Framework 2.0, 3.0, 3.5, 4.0
  - ASP.NET MVC 2 and 3

- Supported IIS Versions:
  - Microsoft IIS 6.0, 7.0, 7.5
  - Microsoft IIS Express 7.x

- Supported versions of Windows, in both 32- and 64-bit:
  - Windows XP, 2003, Vista, 2008, 2008 R2, Windows 7

What's the Difference Between a Profiler and AppDynamics

| WHEN TO USE A PROFILER | WHEN TO USE APPDYNAMICS LITE |
| --- | --- |
| You need to troubleshoot high CPU usage and high memory usage | You need to troubleshoot slow response times, slow SQL, high error rates, and stalls |
| Your environment is Development or QA | Your environment is Production or a performance load test |
| 15-20% overhead is okay | You can't afford more than 2% overhead |

AppDynamics Lite consists of a Viewer and Agent.

AppDynamics Lite Viewer System Requirements

The AppDynamics Lite Viewer is a lightweight Java process. It has an embedded web server that serves a Flash-based browser interface.

Hardware Requirements

- Disk space (install footprint): 15 MB Java, 50 MB .NET

- Disk space (run time footprint): 100 MB (data retention period = 2 hours)

- Minimum recommended hardware: 2 GB RAM, Single CPU, 1 disk

- Memory footprint: 100 MB

Operating System Requirements

AppDynamics Lite runs on any operating system that supports a JVM 1.5 runtime or .NET Framework (2.0, 3.0, 3.5, 4.0) and a web browser with Flash.

Software Requirements

- One of the following browsers:

- Mozilla Firefox 3.x, 4.x, 5.0

- Internet Explorer 6.x, 7.x, 8.x, 9.0

- Safari 4.x, 5.x

- Google Chrome 10.x, 11.x, 12.x

- Adobe Flash Player 10 or above for your browser (get the latest version)

- For better navigation, size your browser window to a minimum of 1020x450 pixels.

AppDynamics Lite Agent System Requirements

The Agent is a lightweight process that runs in your JVM or a module that runs in your CLR.

Software Requirements

- Memory footprint:

- 10 MB Java

- 20-30 MB .NET

- For .NET you need:

- IIS 6 or newer

- .NET Framework 2.0, 3.0, 3.5, or 4.0

Let's focus on Java, since I am working on the Java platform.

Get Started With AppDynamics Lite for Java

Getting started with AppDynamics Lite is fast and easy! Here's how:

1. Ensure that your system meets the AppDynamics Lite System Requirements.

2. Download and extract the latest version of AppDynamicsLite.zip. After you extract AppDynamicsLite.zip you will have two zip files, LiteViewer.zip for the Viewer and AppServerAgentLite.zip for the Agent.

3. Read about the Runtime Options such as authentication and port configuration.

4. Follow the simple installation procedure shown in the following illustration. Alternatively, follow the detailed step-by-step instructions for Installing the Viewer and Installing the Application Server Agent.

Enjoy the Series!

Cloud Tip: Cloudify PaaS Stack Introduction

Hi Folks,


Today let's discuss installation and a sample deployment.

Cloudify - The Open Source PaaS Stack

Gain the on-demand availability, rapid deployment, and agility benefits of moving your app to the cloud, with our open source PaaS stack.

All with no code changes, no lock-in, and full control.

Cloudify Enterprise Deployment:

Download it from:

http://www.cloudifysource.org/downloads/get_cloudify

Don't forget to look at the getting started guide:

http://www.cloudifysource.org/guide/2.2/qsg/quick_start_guide_helloworld

Enjoy the cloud series!

Cloud Tip: Try scaling your website using aiCache

Hi Folks, this might be an easy solution for webmasters who don't have much experience in cloud computing but want to scale their website!


aiCache Web Application Acceleration is available on the Amazon Elastic Compute Cloud, EC2, on a time and transfer basis; there are no sign-up or license fees. We provide two free hours of installation support and can provide paid professional services to assist in general cloud architecture.

aiCache creates a better user experience by increasing the speed, scale, and stability of your website. Test aiCache acceleration for free. No sign-up required.

Try this:

http://aicache.com/deploy

I found an interesting feature that supports dynamic sites:

Dynamic Cloud Caches™ – The Evolution of the CDN

What do you get when you take the reach of the global Amazon Web Services network, combine it with global low-latency load balancing from Amazon Route 53, and terminate on the most intelligent edge device, aiCache?

We call it Dynamic Cloud Caches, or as we hope you will think of it, the CDN for grown-ups. Manage your own content distribution to build scalable, mass personalized web offerings.

- Dynamic Site Acceleration.

- Cheaper Content Delivery without contracts or minimums.

- Realtime monitoring, reporting and alerting.

- SSL acceleration.

- Admin fallback to local or remote site.

Today regional backbones are not congested, websites are dynamic and users are on broadband. The benefits offered by the CDN are primarily limited to large file distribution (video). Superior performance for modern sites can be achieved with a few dynamic endpoints that reduce geographic latency.

Modern websites are generated by the web, app, and database tiers, personalized to the user. These sites are served much faster by intelligent caches that recognize dynamic content and can deliver it selectively, even if only for a few seconds.
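Serving dynamic content from a cache "even if only for a few seconds" is often called micro-caching: a burst of identical requests hits the app and database tiers once, and everyone else gets the cached copy until a short TTL runs out. The sketch below only illustrates that idea; it is not aiCache's implementation or configuration, and the `MicroCache` and `render_page` names and the 2-second TTL are hypothetical. The clock is injectable so the demo is deterministic.

```python
import time
from typing import Callable, Dict, Tuple


class MicroCache:
    """Cache responses for a short TTL so request bursts hit the origin once."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries: Dict[str, Tuple[float, str]] = {}  # url -> (expires_at, body)

    def get(self, url: str, render: Callable[[str], str]) -> str:
        now = self.clock()
        cached = self.entries.get(url)
        if cached and cached[0] > now:
            return cached[1]                   # still fresh: serve from cache
        body = render(url)                     # stale or missing: go to the origin
        self.entries[url] = (now + self.ttl, body)
        return body


origin_hits = 0


def render_page(url: str) -> str:  # hypothetical stand-in for the app/database tiers
    global origin_hits
    origin_hits += 1
    return f"<html>{url} rendered #{origin_hits}</html>"


fake_now = [0.0]
cache = MicroCache(ttl_seconds=2.0, clock=lambda: fake_now[0])
for t in (0.0, 0.5, 1.9, 2.5):   # four requests; the TTL expires before t=2.5
    fake_now[0] = t
    cache.get("/home", render_page)
print(origin_hits)  # 2
```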

Deploying dynamic caches on a cloud like Amazon Web Services, in 2 to 5 locations worldwide, and routing your users to the nearest cache is faster than a CDN. Even better, it's at a fraction of the cost, with greater control, and with real-time data that lets you know exactly what your end users are experiencing.
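Routing each user "to the nearest cache" comes down to choosing, per client, the endpoint with the lowest measured latency, which is conceptually what latency-based DNS routing such as Amazon Route 53's does on your behalf. The sketch below only illustrates that decision: the region labels are hypothetical names for the five locations listed in this post, and the latency figures and the `nearest_endpoint` helper are made up for illustration.

```python
from typing import Dict, Optional, Set

# Hypothetical round-trip times (ms) from one client network to each cache region.
measured_rtt_ms: Dict[str, float] = {
    "us-east": 180.0,
    "us-west": 210.0,
    "eu-ireland": 150.0,
    "ap-singapore": 35.0,
    "ap-tokyo": 90.0,
}


def nearest_endpoint(rtts: Dict[str, float],
                     healthy: Optional[Set[str]] = None) -> str:
    """Pick the lowest-latency region, skipping regions that fail health checks."""
    candidates = {r: ms for r, ms in rtts.items() if healthy is None or r in healthy}
    if not candidates:
        raise RuntimeError("no healthy cache endpoint; fall back to the origin site")
    return min(candidates, key=candidates.get)


print(nearest_endpoint(measured_rtt_ms))                                   # ap-singapore
print(nearest_endpoint(measured_rtt_ms, healthy={"us-east", "ap-tokyo"}))  # ap-tokyo
```

The health-check filter mirrors the "fallback" idea: if the closest cache is down, the next-best one (or the origin) takes the traffic.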

- OBJECTIVE
  - Faster content delivered using global DNS to route users to the nearest geographic aiCache instance.

- AVAILABILITY
  - East Coast (USA)
  - West Coast (USA)
  - Europe (Ireland)
  - Asia (Singapore)
  - Asia (Tokyo)

- FEATURES
  - Dynamic Content Caching
  - Real-time monitoring
  - Distributed Load Balancing

- RESULTS
  - Happier Customers
  - Less Cost
  - Flexibility and Control

There are no minimums, contracts, or commitments. Demo environments are easily deployed and professional services support is available. Non-cloud-based endpoints can be configured, and traffic can be set to share or bleed over to a CDN for ease of transition and redundancy.

One can host on AWS or the RightScale cloud.

Enjoy the series!

Dev Tip: NoSQL Databases comparison (Top 8 NoSQL DBs)

Cassandra vs MongoDB vs CouchDB vs Redis vs Riak vs HBase vs Membase vs Neo4j comparison

While SQL databases are insanely useful tools, their monopoly of ~15 years is coming to an end. And it was about time: I can't even count the things that were forced into relational databases but never really fitted them.

But the differences between NoSQL databases are much bigger than they ever were between one SQL database and another. This means that software architects bear a bigger responsibility to choose the appropriate one for a project right at the beginning.

In this light, here is a comparison of Cassandra, MongoDB, CouchDB, Redis, Riak, Membase, Neo4j and HBase:

MongoDB

- Written in: C++

- Main point: Retains some friendly properties of SQL. (Query, index)

- License: AGPL (Drivers: Apache)

- Protocol: Custom, binary (BSON)

- Master/slave replication (auto failover with replica sets)

- Sharding built-in

- Queries are javascript expressions

- Run arbitrary javascript functions server-side

- Better update-in-place than CouchDB

- Uses memory mapped files for data storage

- Performance over features

- Journaling (with --journal) is best turned on

- On 32-bit systems, limited to ~2.5 GB

- An empty database takes up 192 MB

- GridFS to store big data + metadata (not actually an FS)

- Has geospatial indexing

Best used: If you need dynamic queries. If you prefer to define indexes, not map/reduce functions. If you need good performance on a big DB. If you wanted CouchDB, but your data changes too much, filling up disks.

For example: For most things that you would do with MySQL or PostgreSQL, but having predefined columns really holds you back.

Riak (V1.0)

- Written in: Erlang & C, some Javascript

- Main point: Fault tolerance

- License: Apache

- Protocol: HTTP/REST or custom binary

- Tunable trade-offs for distribution and replication (N, R, W)

- Pre- and post-commit hooks in JavaScript or Erlang, for validation and security.

- Map/reduce in JavaScript or Erlang

- Links & link walking: use it as a graph database

- Secondary indices: but only one at once

- Large object support (Luwak)

- Comes in "open source" and "enterprise" editions

- Full-text search, indexing, querying with Riak Search server (beta)

- In the process of migrating the storing backend from "Bitcask" to Google's "LevelDB"

- Masterless multi-site replication and SNMP monitoring are commercially licensed
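The "tunable trade-offs (N, R, W)" mentioned for Riak (and for Cassandra below) refer to the number of replicas N, and to how many of them must answer a read (R) or acknowledge a write (W). The usual rule of thumb is that when R + W > N, every read quorum overlaps every write quorum, so a read reaches at least one replica holding the latest acknowledged write. The brute-force check below confirms that overlap property for small N. It illustrates the arithmetic only; it is not client code for either database, and failure handling such as Riak's sloppy quorums can weaken the guarantee in practice.

```python
from itertools import combinations


def quorums_always_overlap(n: int, r: int, w: int) -> bool:
    """True if every R-replica read set shares a node with every W-replica write set."""
    replicas = range(n)
    return all(set(read) & set(write)
               for read in combinations(replicas, r)
               for write in combinations(replicas, w))


for n, r, w in [(3, 2, 2), (3, 1, 3), (3, 1, 1), (5, 2, 3)]:
    print(f"N={n} R={r} W={w}: R+W>N is {r + w > n}, "
          f"overlap guaranteed: {quorums_always_overlap(n, r, w)}")
```

Lowering R or W buys latency and availability at the cost of possibly stale reads; raising them buys consistency at the cost of waiting on more replicas.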

Best used: If you want something Cassandra-like (Dynamo-like), but no way you're gonna deal with the bloat and complexity. If you need very good single-site scalability, availability and fault-tolerance, but you're ready to pay for multi-site replication.

For example: Point-of-sales data collection. Factory control systems. Places where even seconds of downtime hurt. Could be used as a well-update-able web server.

CouchDB (V1.1.1)

- Written in: Erlang

- Main point: DB consistency, ease of use

- License: Apache

- Protocol: HTTP/REST

- Bi-directional (!) replication,

- continuous or ad-hoc,

- with conflict detection,

- thus, master-master replication. (!)

- MVCC - write operations do not block reads

- Previous versions of documents are available

- Crash-only (reliable) design

- Needs compacting from time to time

- Views: embedded map/reduce

- Formatting views: lists & shows

- Server-side document validation possible

- Authentication possible

- Real-time updates via _changes (!)

- Attachment handling

- thus, CouchApps (standalone js apps)

- jQuery library included

Best used: For accumulating, occasionally changing data, on which pre-defined queries are to be run. Places where versioning is important.

For example: CRM, CMS systems. Master-master replication is an especially interesting feature, allowing easy multi-site deployments.

Redis (V2.4)

- Written in: C

- Main point: Blazing fast

- License: BSD

- Protocol: Telnet-like

- Disk-backed in-memory database,

- Currently without disk-swap (VM and Diskstore were abandoned)

- Master-slave replication

- Simple values or hash tables by keys,

- but complex operations like ZREVRANGEBYSCORE.

- INCR & co (good for rate limiting or statistics)

- Has sets (also union/diff/inter)

- Has lists (also a queue; blocking pop)

- Has hashes (objects of multiple fields)

- Sorted sets (high score table, good for range queries)

- Redis has transactions (!)

- Values can be set to expire (as in a cache)

- Pub/Sub lets one implement messaging (!)
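The "INCR & co (good for rate limiting)" point refers to a common pattern: increment a per-client counter whose key includes the current time window, and set the key to expire when the window ends. Against a real Redis server this is typically an `INCR` followed by an `EXPIRE` on the first hit. To keep the example self-contained, the sketch below mimics those two commands with an in-memory dictionary, so `FakeRedis`, `allow_request`, and the limits are hypothetical stand-ins rather than Redis itself.

```python
import time
from typing import Callable, Dict, Tuple


class FakeRedis:
    """In-memory stand-in for Redis INCR/EXPIRE semantics (not a real client)."""

    def __init__(self, clock: Callable[[], float] = time.time) -> None:
        self.clock = clock
        self.data: Dict[str, Tuple[int, float]] = {}  # key -> (count, expires_at)

    def incr(self, key: str) -> int:
        count, expires_at = self.data.get(key, (0, float("inf")))
        if expires_at <= self.clock():
            count, expires_at = 0, float("inf")  # expired key starts again from 0
        self.data[key] = (count + 1, expires_at)
        return count + 1

    def expire(self, key: str, seconds: int) -> None:
        count, _ = self.data[key]
        self.data[key] = (count, self.clock() + seconds)


def allow_request(r: FakeRedis, client_id: str, limit: int = 3, window: int = 60) -> bool:
    """Fixed-window limiter: at most `limit` requests per client per window."""
    key = f"rate:{client_id}:{int(r.clock() // window)}"
    count = r.incr(key)
    if count == 1:
        r.expire(key, window)  # the first hit in a window sets the TTL
    return count <= limit


now = [1000.0]
r = FakeRedis(clock=lambda: now[0])
print([allow_request(r, "alice") for _ in range(5)])  # [True, True, True, False, False]
now[0] += 60                                           # move into the next window
print(allow_request(r, "alice"))                       # True
```

With a real server, the INCR and EXPIRE are often sent together in a MULTI/EXEC transaction or a pipeline so that a crash between them cannot leave a counter without a TTL.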

Best used: For rapidly changing data with a foreseeable database size (should fit mostly in memory).

For example: Stock prices. Analytics. Real-time data collection. Real-time communication.

HBase (V0.92.0)

- Written in: Java

- Main point: Billions of rows X millions of columns

- License: Apache

- Protocol: HTTP/REST (also Thrift)

- Modeled after Google's BigTable

- Uses Hadoop's HDFS as storage

- Map/reduce with Hadoop

- Query predicate push down via server side scan and get filters

- Optimizations for real time queries

- A high performance Thrift gateway

- HTTP supports XML, Protobuf, and binary

- Cascading, hive, and pig source and sink modules

- JRuby-based (JIRB) shell

- Rolling restart for configuration changes and minor upgrades

- Random access performance is like MySQL

- A cluster consists of several different types of nodes

Best used: Hadoop is probably still the best way to run Map/Reduce jobs on huge datasets. Best if you use the Hadoop/HDFS stack already.

For example: Analysing log data.

Neo4j (V1.5M02)

- Written in: Java

- Main point: Graph database - connected data

- License: GPL, some features AGPL/commercial

- Protocol: HTTP/REST (or embedding in Java)

- Standalone, or embeddable into Java applications

- Full ACID conformity (including durable data)

- Both nodes and relationships can have metadata

- Integrated pattern-matching-based query language ("Cypher")

- Also the "Gremlin" graph traversal language can be used

- Indexing of nodes and relationships

- Nice self-contained web admin

- Advanced path-finding with multiple algorithms

- Indexing of keys and relationships

- Optimized for reads

- Has transactions (in the Java API)

- Scriptable in Groovy

- Online backup, advanced monitoring and High Availability is AGPL/commercial licensed

Best used: For graph-style, rich or complex, interconnected data. Neo4j is quite different from the others in this sense.

For example: Social relations, public transport links, road maps, network topologies.

Cassandra

- Written in: Java

- Main point: Best of BigTable and Dynamo

- License: Apache

- Protocol: Custom, binary (Thrift)

- Tunable trade-offs for distribution and replication (N, R, W)

- Querying by column, range of keys

- BigTable-like features: columns, column families

- Has secondary indices

- Writes are much faster than reads (!)

- Map/reduce possible with Apache Hadoop

- All nodes are similar, as opposed to Hadoop/HBase

Best used: When you write more than you read (logging). If every component of the system must be in Java. ("No one gets fired for choosing Apache's stuff.")

For example: Banking, financial industry (though not necessarily for financial transactions, but these industries are much bigger than that.) Writes are faster than reads, so one natural niche is real time data analysis.

Membase

- Written in: Erlang & C

- Main point: Memcache compatible, but with persistence and clustering

- License: Apache 2.0

- Protocol: memcached plus extensions

- Very fast (200k+/sec) access of data by key

- Persistence to disk

- All nodes are identical (master-master replication)

- Provides memcached-style in-memory caching buckets, too

- Write de-duplication to reduce IO

- Very nice cluster-management web GUI

- Software upgrades without taking the DB offline

- Connection proxy for connection pooling and multiplexing (Moxi)

Best used: Any application where low-latency data access, high concurrency support and high availability is a requirement.

For example: Low-latency use-cases like ad targeting or highly-concurrent web apps like online gaming (e.g. Zynga).

Courtesy: Kristóf Kovács


Dev Tip: MongoDB scalability Animation

Hi Folks, today let's look at how MongoDB scales, with an animated video, along with an animated discussion of SQL vs NoSQL for better understanding.

MongoDB Scalability Animated Video:

SQL vs NoSQL Animated Slides:

Enjoy the Series!


09 November 2012

Dev Tip: How to create HTML5 based Data Centric Web Apps in 10 Minutes

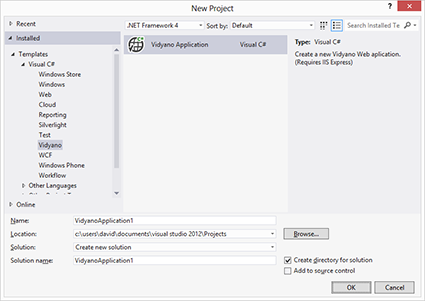

Today I found an interesting framework, "Vidyano", based on C# .NET. It comes as a kickstart project; one can install Vidyano along with Visual Studio 2010/2012 and IIS 7.5 Express.

How it Works:


1. DECIDE WHICH DATABASE YOU WANT FOR THE WEB APPLICATION

Do you already have that particular movie in your private collection? How many items of that specific product do you currently have in stock? Which consultants are readily available for a given assignment? There is a lot of information that you may have stored in a database on a firewalled corporate server or even on a desktop pc at home. Information that you might want to share with other people or manage yourself when you are on the road, far away from your private database. Now it's easy to do just that and a lot more in just a few minutes.

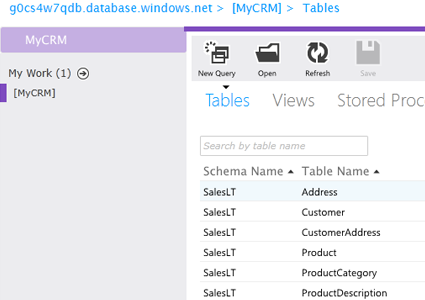

Select any of your databases that has a compatible Entity Framework provider such as SQL Server or SQL (Azure) Database.

2. CREATE A VIDYANO WEB APPLICATION USING VISUAL STUDIO

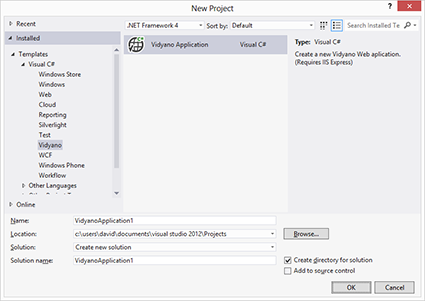

Download the Vidyano extension from the Visual Studio Gallery or via the Extensions and Updates dialog in Visual Studio 2010 or 2012. Create a new Vidyano web application and select your target database. Vidyano will set up your web application and analyze your database.

Launch your application and start adding functionalities in real-time! Vidyano provides you with data triggers, a built-in data validation engine and custom actions that allow you to make your application behave exactly the way you want. You have the option to write code in Visual Studio using C# or in your browser using server-side JavaScript.

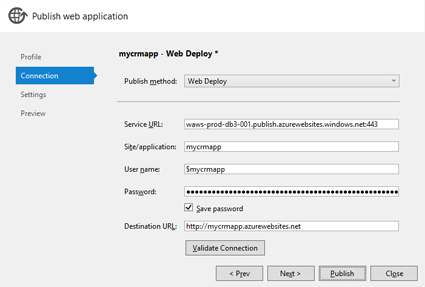

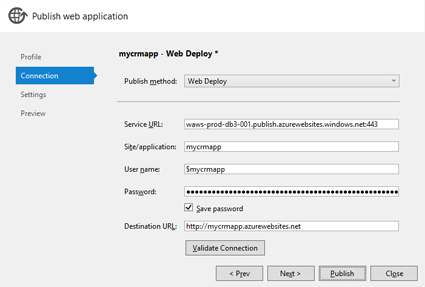

3. DEPLOY YOUR VIDYANO WEB APPLICATION TO IIS OR WINDOWS AZURE

Before anyone is able to access your web application from the outside world, you will need to deploy it to a public server that has access to your database.

Your web application can easily be hosted on any Internet Information Services (IIS) server you already have or you can one-click publish it to Windows Azure Websites, allowing you to host your web application for free and scale out when needed. Your web application is also compatible with Windows Azure Cloud Services and can be set to automatically scale in or out according to your schedule.

4. PROVIDE SECURE ACCESS TO THE WEB APPLICATION FROM ANY DEVICE

You now have a full-featured HTML5 web application up and running that gives anyone you authorize access to your data from any device, at any time, and from anywhere.

Take advantage of built-in features such as easily adding custom or data validation logic at runtime, providing custom templates that make your data stand out, managing users and fine-tuning security at any moment, translating your application into over 30 languages with a single click, providing real-time data to other applications such as Microsoft Excel in less than 30 seconds, and a lot more!
